Building a data lake on AWS might sound daunting, but it doesn’t have to be.

With the right approach, you can quickly set up a scalable, secure, and cost-effective data lake.

Here’s a step-by-step guide to get you started.

Understanding the Basics

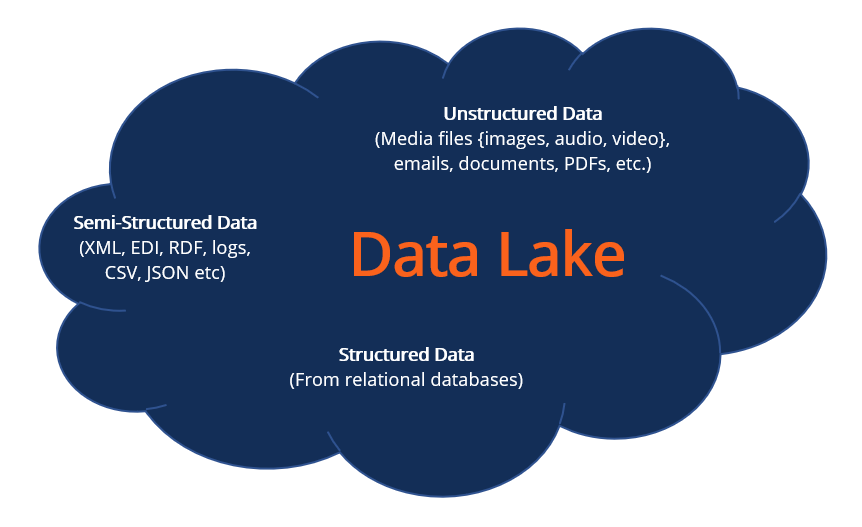

A data lake is a centralized repository that allows you to store all your structured and unstructured data at any scale.

Unlike traditional databases, which need a schema before any data is loaded, a data lake stores raw data as-is and applies structure only when the data is read or transformed.

Services like Amazon S3, AWS Glue, and Amazon Athena make this process straightforward on AWS.

Setting Up Amazon S3

Amazon S3 (Simple Storage Service) is the backbone of your data lake. It’s a highly scalable and durable service where you store your data.

Here’s what to do next:

Create an S3 Bucket: Go to the S3 console and create a new bucket. Name it something relevant, like my-data-lake.

Organize Your Data: Use folders (prefixes) within your bucket to organize data by type or source, e.g., /raw-data/ and /processed-data/.

Set Permissions: Ensure your bucket policies and access controls are correctly set to secure your data.

For example, create a bucket named my-data-lake and organize it with folders like /sales-data/, /logs/, and /user-data/.
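If you prefer code over the console, the three steps above can be sketched with boto3, the AWS SDK for Python. This is a minimal sketch, not the only way to do it: the bucket name and prefixes come from the examples above, the default region is an assumption, and `object_key` and `create_data_lake_bucket` are illustrative helper names.

```python
BUCKET = "my-data-lake"  # example name from the text; real bucket names must be globally unique
PREFIXES = ["raw-data/", "processed-data/", "sales-data/", "logs/", "user-data/"]

# Settings that block every form of public access to the bucket.
PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def object_key(prefix: str, filename: str) -> str:
    """Join a prefix and a file name into an S3 object key (no leading slash)."""
    return f"{prefix.strip('/')}/{filename}"


def create_data_lake_bucket(region: str = "us-east-1") -> None:
    """Create the bucket, block public access, and add placeholder 'folders'."""
    import boto3  # imported lazily so the helpers above work without the AWS SDK

    s3 = boto3.client("s3", region_name=region)
    if region == "us-east-1":
        # us-east-1 is the default and must not be passed as a LocationConstraint.
        s3.create_bucket(Bucket=BUCKET)
    else:
        s3.create_bucket(
            Bucket=BUCKET,
            CreateBucketConfiguration={"LocationConstraint": region},
        )
    s3.put_public_access_block(
        Bucket=BUCKET,
        PublicAccessBlockConfiguration=PUBLIC_ACCESS_BLOCK,
    )
    for prefix in PREFIXES:
        # A zero-byte object whose key ends in "/" shows up as a folder in the console.
        s3.put_object(Bucket=BUCKET, Key=prefix)
```

S3 has no real folders. A "folder" is just a shared key prefix, which is why object keys are written without a leading slash.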

Ingesting Data with AWS Glue

AWS Glue is a fully managed ETL (extract, transform, load) service that makes it easy to prepare and load your data for analytics.

To get started with AWS Glue:

Create a Glue Crawler: Go to the Glue console and create a crawler. Point it to your S3 bucket.

Run the Crawler: The crawler scans your data, infers its schema, and writes table definitions describing your data's structure to the AWS Glue Data Catalog.

Set Up ETL Jobs: Create ETL jobs in Glue to transform your raw data into an analytics-friendly format, such as a columnar format like Parquet.

For instance, you can create a crawler to scan the /raw-data/ folder and set up an ETL job to transform the raw logs into a structured format.
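Here is a rough sketch of the crawler part in Python with boto3. The IAM role ARN and the database name are placeholders you would supply yourself, and `crawler_config` and `create_and_run_crawler` are illustrative names, not part of Glue.

```python
def crawler_config(bucket: str, prefix: str, role_arn: str, database: str) -> dict:
    """Build the arguments for a crawler that scans one prefix of the bucket."""
    clean_prefix = prefix.strip("/")
    return {
        "Name": f"{clean_prefix}-crawler",
        "Role": role_arn,          # IAM role Glue assumes to read the bucket
        "DatabaseName": database,  # Data Catalog database that receives the tables
        "Targets": {"S3Targets": [{"Path": f"s3://{bucket}/{clean_prefix}/"}]},
    }


def create_and_run_crawler(config: dict) -> None:
    """Register the crawler with Glue and start its first run."""
    import boto3  # imported lazily so crawler_config works without the AWS SDK

    glue = boto3.client("glue")
    glue.create_crawler(**config)
    glue.start_crawler(Name=config["Name"])
```

Keeping the configuration in a plain dictionary makes it easy to review or unit-test before anything is created in your account.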

Querying Data with Amazon Athena

Amazon Athena is an interactive query service that makes it easy to analyze data in S3 using standard SQL. There’s no need to set up or manage any infrastructure.

Here are 3 steps to get started:

Connect Athena to Glue: Go to the Athena console and select the database your crawler created in the AWS Glue Data Catalog. Under settings, choose an S3 location where Athena will store query results.

Write SQL Queries: Use SQL to query your data directly from S3. For example: SELECT * FROM sales_data WHERE region = 'US'

Visualize Results: You can save your query results to S3 or visualize them using tools like Amazon QuickSight.

For example, to analyze sales data, write a query like SELECT COUNT(*) FROM sales_data WHERE sale_date BETWEEN '2023-01-01' AND '2023-06-30'.
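The same query can be run from code. The sketch below uses boto3 and assumes a `sales_data` table with a `sale_date` column stored as ISO-formatted strings, as in the example above; the database name and the results location are placeholders, and the function names are my own.

```python
import time
from datetime import date


def count_sales_query(start: date, end: date, table: str = "sales_data") -> str:
    """Build the COUNT(*) query from the text for a given date range."""
    return (
        f"SELECT COUNT(*) FROM {table} "
        f"WHERE sale_date BETWEEN '{start.isoformat()}' AND '{end.isoformat()}'"
    )


def run_query(sql: str, database: str, output_location: str) -> str:
    """Start the query in Athena and wait until it reaches a final state."""
    import boto3  # imported lazily so count_sales_query works without the AWS SDK

    athena = boto3.client("athena")
    query_id = athena.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},  # e.g. an s3:// URI
    )["QueryExecutionId"]
    while True:
        execution = athena.get_query_execution(QueryExecutionId=query_id)
        state = execution["QueryExecution"]["Status"]["State"]
        if state in ("SUCCEEDED", "FAILED", "CANCELLED"):
            return state
        time.sleep(1)  # Athena runs queries asynchronously, so poll for completion
```

Only pass trusted values as the table name, since it is interpolated directly into the SQL string.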

Securing Your Data Lake

Security is crucial when managing a data lake. AWS provides several tools to ensure your data is safe.

Here’s what to do next:

Enable S3 Encryption: Ensure that all data stored in S3 is encrypted at rest, using either S3-managed keys (SSE-S3) or your own keys in AWS KMS (SSE-KMS).

Set Up IAM Policies: Use AWS Identity and Access Management (IAM) to control access to your data. Create policies that grant the least privilege necessary.

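Monitor with CloudWatch: Use Amazon CloudWatch to track request metrics for your bucket and set up alerts for any unusual activity. For a record of who accessed which objects, enable AWS CloudTrail data events or S3 server access logs.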

For instance, enable server-side encryption (SSE) for your S3 bucket and create an IAM policy that only allows specific users to access the /processed-data/ folder.
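As a sketch of what that could look like, the Python below builds a read-only IAM policy scoped to one prefix and a default-encryption setting for the bucket. The policy shape is one reasonable interpretation of least privilege, not the only one, and the helper names are illustrative.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy that allows listing and reading one prefix only."""
    clean_prefix = prefix.strip("/")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListOnlyThisPrefix",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                "Condition": {"StringLike": {"s3:prefix": [f"{clean_prefix}/*"]}},
            },
            {
                "Sid": "ReadObjectsUnderPrefix",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{clean_prefix}/*",
            },
        ],
    }


# Default encryption with S3-managed keys (SSE-S3).
SSE_S3_DEFAULT = {
    "Rules": [{"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}]
}


def apply_default_encryption(bucket: str) -> None:
    """Set SSE-S3 as the bucket's default encryption."""
    import boto3  # imported lazily so the policy helper works without the AWS SDK

    boto3.client("s3").put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=SSE_S3_DEFAULT,
    )


if __name__ == "__main__":
    # Print the policy JSON so it can be pasted into the IAM console.
    print(json.dumps(read_only_prefix_policy("my-data-lake", "/processed-data/"), indent=2))
```

Attach the resulting policy only to the users or roles that need the /processed-data/ folder, and leave everything else denied by default.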

Automating Data Processing

Automating data processing can save time and reduce errors. AWS Lambda, a serverless computing service, can help with this.

To automate your data processing:

Set Up Lambda Functions: Write Lambda functions to automate data transformation and loading tasks.

Trigger on S3 Events: Configure S3 to trigger Lambda functions when new data is uploaded.

Manage Workflows with Step Functions: Use AWS Step Functions to build workflows that orchestrate multiple Lambda functions.

For example, set up a Lambda function that triggers when a new file is uploaded to /raw-data/ and processes it into /processed-data/.
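A minimal sketch of such a function in Python might look like this. The transformation itself is a placeholder (the file is copied unchanged), and `destination_key` and `uploaded_objects` are illustrative helpers; only the event layout and the boto3 calls come from AWS.

```python
from urllib.parse import unquote_plus

RAW_PREFIX = "raw-data/"
PROCESSED_PREFIX = "processed-data/"


def destination_key(source_key: str) -> str:
    """Map a key under raw-data/ to the matching key under processed-data/."""
    if not source_key.startswith(RAW_PREFIX):
        raise ValueError(f"unexpected key outside {RAW_PREFIX}: {source_key}")
    return PROCESSED_PREFIX + source_key[len(RAW_PREFIX):]


def uploaded_objects(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification."""
    return [
        # Keys arrive URL-encoded in S3 events (a space becomes "+").
        (record["s3"]["bucket"]["name"], unquote_plus(record["s3"]["object"]["key"]))
        for record in event.get("Records", [])
    ]


def lambda_handler(event, context):
    """Entry point that Lambda calls for each S3 upload notification."""
    import boto3  # available in the Lambda Python runtime

    s3 = boto3.client("s3")
    written = []
    for bucket, key in uploaded_objects(event):
        body = s3.get_object(Bucket=bucket, Key=key)["Body"].read()
        transformed = body  # placeholder: replace with your own transformation
        target = destination_key(key)
        s3.put_object(Bucket=bucket, Key=target, Body=transformed)
        written.append(target)
    return {"written": written}
```

When you configure the trigger, limit it to the raw-data/ prefix. Otherwise the files written to processed-data/ would trigger the function again.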

Monitoring and Maintenance

Regular monitoring and maintenance ensure your data lake remains efficient and secure. AWS offers tools like CloudWatch and AWS Config to help.

Here’s how to monitor your data lake:

Use CloudWatch Metrics: Set up CloudWatch to monitor the performance and usage of your data lake.

Set Up Alarms: Create alarms to notify you of any performance issues or unusual activities.

Regularly Review Policies: Review and update your IAM and bucket policies to ensure they align with best practices.

For example, create a CloudWatch alarm to notify you if the number of failed Glue jobs exceeds a certain threshold.
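One way to sketch that alarm in Python is shown below. Note the assumptions: the namespace `DataLake/Glue` and the metric `FailedJobRuns` are hypothetical custom names that your own code would need to publish (for example, once per failed job run), and the SNS topic ARN is a placeholder.

```python
def failed_jobs_alarm(threshold: int, sns_topic_arn: str) -> dict:
    """Build alarm settings that fire when failed job runs exceed the threshold."""
    return {
        "AlarmName": "glue-failed-jobs",
        "Namespace": "DataLake/Glue",    # hypothetical custom namespace
        "MetricName": "FailedJobRuns",   # hypothetical custom metric
        "Statistic": "Sum",
        "Period": 3600,                  # evaluate failures per hour
        "EvaluationPeriods": 1,
        "Threshold": float(threshold),
        "ComparisonOperator": "GreaterThanThreshold",
        "TreatMissingData": "notBreaching",  # no data points means no failures
        "AlarmActions": [sns_topic_arn],     # SNS topic that sends the notification
    }


def create_alarm(params: dict) -> None:
    """Create or update the alarm in CloudWatch."""
    import boto3  # imported lazily so failed_jobs_alarm works without the AWS SDK

    boto3.client("cloudwatch").put_metric_alarm(**params)
```

For instance, `failed_jobs_alarm(3, topic_arn)` describes an alarm that notifies you when more than three job runs fail within an hour.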

Final Thoughts

Building a data lake on AWS doesn’t have to be overwhelming.

By following these steps, you can set up a robust, scalable, and secure data lake.

Start with S3 for storage, use Glue for data cataloging and transformation, and leverage Athena for querying.

Always ensure your data is secure and automate processes to save time and reduce errors.

That’s it for today!

Until next week — Amrut
