
Mastering MLOps on AWS might seem daunting, but with a structured 30-day plan, you can build a solid foundation.

In today’s edition, I’ve designed an MLOps roadmap for beginners.

It offers actionable steps to help you navigate the complexities of MLOps and leverage AWS services effectively.

But before we begin, do you want to understand how writing can unlock massive opportunities and help you grow professionally?

Then, I have something special for you today.

The Ultimate Guide To Start Writing Online by Ship 30 for 30.

Nicolas Cole and Dickie Bush, the creators of Ship 30 for 30, put together this helpful 20,000-word guide explaining the frameworks, techniques, and tools you need to generate endless ideas, build a massive online audience, and get started. They give it all away for FREE!

You can download it here.

I'd love to know if it inspires you to start writing online.

P.S. This guide encouraged me to sign up for their writing course. :)

Ok, now back to the newsletter edition for this week.

Day 1-5: Understanding MLOps Fundamentals

Before diving into AWS tools, it's essential to grasp the basics of MLOps.

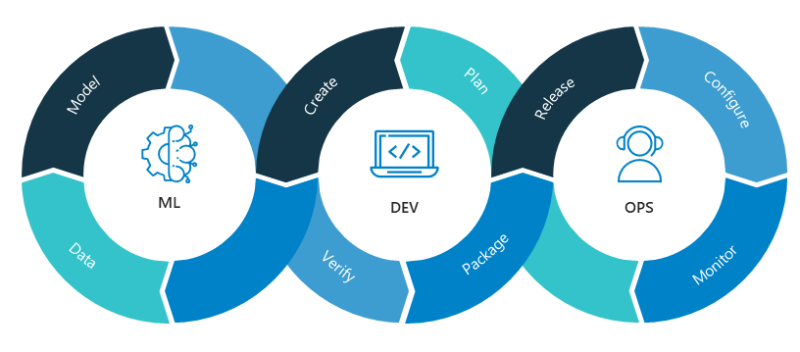

MLOps, short for Machine Learning Operations, is the practice of deploying, monitoring, and maintaining machine learning models in production.

Step 1: Learn the MLOps Lifecycle

The MLOps lifecycle involves data preparation, model training, deployment, and monitoring.

Read introductory articles or watch videos on YouTube to familiarize yourself with each stage. For example, the lifecycle starts with data ingestion and ends with continuous model improvement based on real-world feedback.

Step 2: Explore AWS MLOps Resources

AWS offers many resources on MLOps, including whitepapers, tutorials, and webinars.

Start with AWS Prescriptive Guidance, which gives an overview of key concepts and best practices.

Day 6-10: Setting Up Your AWS Environment

Now that you understand MLOps, it's time to set up your AWS environment.

Step 1: Create an AWS Account

If you don't already have an AWS account, sign up for one. Use the Free Tier to minimize costs as you experiment with different services.

Step 2: Configure IAM Roles and Policies

Set up Identity and Access Management (IAM) roles and policies to secure your environment.

Create a SageMaker execution role: an IAM role that SageMaker assumes on your behalf, granted only the permissions it needs (for example, read and write access to your S3 bucket). Following this least-privilege approach helps protect your models and data from unauthorized access.
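The role described above has two parts: a trust policy that lets SageMaker assume it, and a permissions policy that limits what it can do. The sketch below builds both as Python dicts; the bucket name is a hypothetical placeholder, and the permissions shown are a deliberately minimal starting point rather than a complete execution role.

```python
import json


def sagemaker_trust_policy() -> dict:
    """Trust policy that allows the SageMaker service to assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "sagemaker.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def s3_access_policy(bucket: str) -> dict:
    """Least-privilege permissions: list one bucket, read/write its objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # ListBucket applies to the bucket ARN itself
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],
            },
            {
                # GetObject/PutObject apply to the objects inside the bucket
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # "my-mlops-bucket" is a hypothetical bucket name
    print(json.dumps(sagemaker_trust_policy(), indent=2))
    print(json.dumps(s3_access_policy("my-mlops-bucket"), indent=2))
```

With boto3, you could pass json.dumps(sagemaker_trust_policy()) as AssumeRolePolicyDocument to iam.create_role, then attach the bucket permissions with iam.put_role_policy. A real execution role usually needs more than this (for example, CloudWatch Logs permissions so training jobs can write logs).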

Step 3: Familiarize Yourself with Amazon SageMaker

Amazon SageMaker is a fully managed service that lets developers and data scientists quickly build, train, and deploy machine learning models.

Spend some time exploring the SageMaker console and understanding its capabilities.

Day 11-15: Data Preparation and Management

Effective data management is the backbone of any successful machine learning project. In these five days, you'll focus on how to handle data using AWS services.

Step 1: Store Data in S3

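Amazon S3 is the go-to storage service for data on AWS. Upload your datasets to S3 and organize them using prefixes (shown as folders in the console) and tags. For example, keep training, validation, and test data under separate prefixes such as train/, validation/, and test/, or in separate buckets if they need different access controls.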
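One way to organize the splits is to give each its own prefix under a single project folder. The sketch below shows a reproducible train/validation/test split and the S3 URI each split would land under; the bucket and project names, the 70/15/15 ratios, and the helper names are all hypothetical.

```python
import random

SPLITS = ("train", "validation", "test")


def s3_uri(bucket: str, project: str, split: str, filename: str) -> str:
    """Build an S3 URI where each data split lives under its own prefix."""
    if split not in SPLITS:
        raise ValueError(f"unknown split: {split!r}")
    return f"s3://{bucket}/{project}/{split}/{filename}"


def split_records(records, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle reproducibly, then cut into train/validation/test lists."""
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    return {
        "train": shuffled[:n_train],
        "validation": shuffled[n_train:n_train + n_val],
        "test": shuffled[n_train + n_val:],  # whatever remains
    }


if __name__ == "__main__":
    parts = split_records(range(100))
    for name, rows in parts.items():
        # "my-mlops-bucket" and "churn" are hypothetical names
        print(name, len(rows), s3_uri("my-mlops-bucket", "churn", name, "data.csv"))
```

Each local file could then be uploaded with boto3's s3.upload_file(local_path, bucket, key), where key is the part of the URI after the bucket name. Fixing the seed keeps the split identical across reruns, which matters when you later compare models.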

Step 2: Use AWS Glue for Data Preparation

AWS Glue is a serverless data integration service that makes it easy to prepare and transform data for analytics and machine learning.

Set up a Glue job to clean and format your data before feeding it into your machine learning model.
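A real Glue ETL job would typically run PySpark (often with Glue's DynamicFrame), so the sketch below is not Glue code. It only illustrates the kind of cleaning logic such a job might apply, in plain Python: normalize headers, trim values, and drop rows missing required fields. The column names are hypothetical.

```python
import csv
import io


def clean_rows(raw_csv: str, required: list) -> list:
    """Normalize headers and values, then drop rows missing required fields."""
    cleaned = []
    for row in csv.DictReader(io.StringIO(raw_csv)):
        # Lowercase/trim header names and trim values; skip overflow columns (key None)
        row = {k.strip().lower(): (v or "").strip() for k, v in row.items() if k is not None}
        if all(row.get(col) for col in required):
            cleaned.append(row)
    return cleaned


def to_csv(rows: list, columns: list) -> str:
    """Serialize cleaned rows back to CSV with a fixed column order."""
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=columns, extrasaction="ignore", lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


if __name__ == "__main__":
    raw = "Customer_ID, Tenure ,Churn\n1, 12 ,yes\n2,,no\n3,5, no \n"
    print(to_csv(clean_rows(raw, ["customer_id", "tenure"]), ["customer_id", "tenure", "churn"]))
```

Writing the output with a fixed column order makes the cleaned files predictable for the training step that reads them next.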

Step 3: Experiment with AWS Data Wrangler

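AWS Data Wrangler (since renamed AWS SDK for pandas, package name awswrangler) is an open-source Python library that extends pandas to work with AWS services such as S3, Glue, and Athena. It is separate from SageMaker Data Wrangler, a visual data-preparation feature in SageMaker Studio.

You can install it in a SageMaker notebook to load, process, and analyze data directly from there.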

Use it to perform exploratory data analysis (EDA) and feature engineering.
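With AWS SDK for pandas you would typically load data with awswrangler.s3.read_csv and then use pandas methods such as describe(). So that it runs anywhere without AWS access, the sketch below reproduces a small slice of that workflow with the standard library: a per-column summary for EDA and one engineered feature. The column names and the ratio feature are hypothetical examples.

```python
import statistics


def summarize(rows, numeric_cols):
    """Per-column count, missing values, mean, and standard deviation."""
    summary = {}
    for col in numeric_cols:
        values = [float(r[col]) for r in rows if r.get(col) not in (None, "")]
        summary[col] = {
            "count": len(values),
            "missing": len(rows) - len(values),
            "mean": statistics.mean(values) if values else None,
            "stdev": statistics.stdev(values) if len(values) > 1 else None,
        }
    return summary


def add_ratio_feature(rows, numerator, denominator, name):
    """Feature engineering: add numerator/denominator, or None if undefined."""
    for r in rows:
        try:
            r[name] = float(r[numerator]) / float(r[denominator])
        except (KeyError, ValueError, ZeroDivisionError):
            r[name] = None
    return rows


if __name__ == "__main__":
    data = [{"tenure": "12", "charges": "60"}, {"tenure": "", "charges": "30"}]
    print(summarize(data, ["tenure", "charges"]))
    print(add_ratio_feature(data, "charges", "tenure", "charges_per_month"))
```

Counting missing values before computing statistics is a quick way to spot columns that need more cleaning upstream.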

Day 16-20: Model Training and Experimentation

Once your data is prepared, it's time to train your model and experiment with different algorithms.

Step 1: Use SageMaker Built-in Algorithms

Amazon SageMaker offers a variety of built-in algorithms for tasks like classification, regression, and clustering.

Start by training a model using one of these algorithms. For example, use the XGBoost algorithm for a regression task.
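At the API level, training with built-in XGBoost comes down to a single create_training_job request. The sketch below builds that request as a dict; the job name, role ARN, S3 URIs, instance type, and hyperparameter values are placeholders. The XGBoost image URI is region-specific and can be looked up with the SageMaker Python SDK's sagemaker.image_uris.retrieve. For CSV input, built-in XGBoost expects the target in the first column and no header row.

```python
def s3_channel(name: str, s3_uri: str) -> dict:
    """One input channel pointing at an S3 prefix of headerless CSV files."""
    return {
        "ChannelName": name,
        "DataSource": {
            "S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }
        },
        "ContentType": "text/csv",
    }


def xgboost_training_request(job_name, image_uri, role_arn, train_uri, validation_uri, output_uri):
    """Keyword arguments for boto3's SageMaker create_training_job call."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"},
        "RoleArn": role_arn,
        "InputDataConfig": [
            s3_channel("train", train_uri),
            s3_channel("validation", validation_uri),
        ],
        "OutputDataConfig": {"S3OutputPath": output_uri},
        "ResourceConfig": {"InstanceType": "ml.m5.xlarge", "InstanceCount": 1, "VolumeSizeInGB": 10},
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
        # The API expects hyperparameter values as strings
        "HyperParameters": {
            "objective": "reg:squarederror",
            "num_round": "100",
            "max_depth": "5",
            "eta": "0.2",
        },
    }
```

Usage would look like boto3.client("sagemaker").create_training_job(**request). Providing a validation channel lets built-in XGBoost report validation metrics, which the tuning step relies on.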

Step 2: Set Up Hyperparameter Tuning

Hyperparameter tuning is critical for optimizing model performance. SageMaker makes this easy with its built-in hyperparameter tuning feature.

Set up a tuning job to automatically find the best hyperparameters for your model.
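A tuning job needs an objective metric, search ranges, and a budget. The sketch below builds a HyperParameterTuningJobConfig for the XGBoost example, minimizing validation:rmse; the ranges and job limits are illustrative assumptions, not recommended values.

```python
def xgboost_tuning_config(max_jobs: int = 20, max_parallel: int = 2) -> dict:
    """HyperParameterTuningJobConfig for create_hyper_parameter_tuning_job."""
    if not 1 <= max_parallel <= max_jobs:
        raise ValueError("need 1 <= max_parallel <= max_jobs")
    return {
        "Strategy": "Bayesian",
        # validation:rmse is reported by built-in XGBoost when a validation channel is provided
        "HyperParameterTuningJobObjective": {"Type": "Minimize", "MetricName": "validation:rmse"},
        "ResourceLimits": {
            "MaxNumberOfTrainingJobs": max_jobs,
            "MaxParallelTrainingJobs": max_parallel,
        },
        "ParameterRanges": {
            # Range bounds are strings in the API, like hyperparameter values
            "ContinuousParameterRanges": [{"Name": "eta", "MinValue": "0.05", "MaxValue": "0.5"}],
            "IntegerParameterRanges": [{"Name": "max_depth", "MinValue": "3", "MaxValue": "10"}],
        },
    }
```

This config is passed together with a TrainingJobDefinition whose StaticHyperParameters hold the values you are not tuning (such as objective and num_round). Keeping MaxParallelTrainingJobs low lets Bayesian search learn from finished jobs before launching new ones.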

Step 3: Track Experiments with SageMaker Experiments

SageMaker Experiments helps you manage and track your machine learning experiments. It can log model parameters, metrics, and metadata, making it easier to compare different runs and choose the best model.
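Recent versions of the SageMaker Python SDK expose a Run context manager with log_parameter and log_metric methods for this. To show the underlying idea without an AWS session, the sketch below is a hypothetical, purely local stand-in (LocalExperiment and LocalRun are illustrative names, not SDK classes) that records parameters and metrics per run and picks the best one.

```python
from dataclasses import dataclass, field


@dataclass
class LocalRun:
    """Hypothetical local record of one training run."""
    name: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)

    def log_parameter(self, key, value):
        self.params[key] = value

    def log_metric(self, key, value):
        self.metrics[key] = value


@dataclass
class LocalExperiment:
    """Hypothetical container grouping runs so they can be compared."""
    name: str
    runs: list = field(default_factory=list)

    def new_run(self, run_name: str) -> LocalRun:
        run = LocalRun(run_name)
        self.runs.append(run)
        return run

    def best_run(self, metric: str, minimize: bool = True) -> LocalRun:
        """Return the run with the best value of `metric` among runs that logged it."""
        scored = [r for r in self.runs if metric in r.metrics]
        if not scored:
            raise ValueError(f"no run logged {metric!r}")
        pick = min if minimize else max
        return pick(scored, key=lambda r: r.metrics[metric])
```

For example, two runs logging max_depth and validation:rmse can be compared with best_run("validation:rmse"); the value of a tracking tool is that this comparison stays possible across dozens of runs and weeks of work.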

Day 21-25: Model Deployment and Monitoring

Deploying and monitoring your model is crucial for ensuring it performs well in production.

Step 1: Deploy Your Model with SageMaker

Deploy your trained model as a SageMaker endpoint. This lets you integrate the model into your applications and make real-time predictions.

For instance, deploy a model that predicts customer churn and incorporate it into your CRM system.
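Once the endpoint is live, an application calls it through the SageMaker runtime's invoke_endpoint, which takes EndpointName, ContentType, and Body and returns a streaming Body. The sketch below assumes a hypothetical endpoint named "churn-endpoint" serving a model trained with objective binary:logistic (so it returns a probability) on CSV features; the feature order and the 0.5 threshold are also assumptions. A fake client stands in for boto3.client("sagemaker-runtime") so the code runs offline.

```python
import io


def predict_churn(client, endpoint_name: str, features: list, threshold: float = 0.5) -> dict:
    """Send one CSV row to the endpoint and turn the score into a churn flag."""
    payload = ",".join(str(x) for x in features)
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",
        Body=payload,
    )
    score = float(response["Body"].read().decode("utf-8").strip())
    return {"score": score, "churn": score >= threshold}


class FakeRuntimeClient:
    """Offline stand-in for boto3.client("sagemaker-runtime")."""

    def __init__(self, score: float):
        self.score = score
        self.calls = []

    def invoke_endpoint(self, **kwargs):
        self.calls.append(kwargs)
        return {"Body": io.BytesIO(str(self.score).encode("utf-8"))}


if __name__ == "__main__":
    fake = FakeRuntimeClient(0.82)
    print(predict_churn(fake, "churn-endpoint", [12, 70.5, 1]))
```

Passing the client in as an argument keeps the CRM-side code testable without a deployed endpoint; in production you would pass the real boto3 client instead.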

Step 2: Monitor Model Performance with SageMaker Model Monitor

SageMaker Model Monitor automatically monitors your deployed models for data and prediction quality.

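Set up monitoring schedules to detect data drift, bias, and other issues that could degrade model performance over time. Model Monitor analyzes data captured from your endpoint, so enable data capture when you deploy.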
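Model Monitor works by suggesting baseline statistics and constraints from your training data, then comparing captured endpoint traffic against them on a schedule. As a swapped-in illustration of what "drift" means numerically (not how Model Monitor computes its checks), the sketch below calculates the population stability index (PSI) for one feature. The bin edges are assumptions; a PSI above roughly 0.2 is a commonly used rule of thumb for a significant shift.

```python
import math


def bin_proportions(values, edges, eps=1e-6):
    """Share of values per bin [edges[i], edges[i+1]); the last bin includes its right edge.

    Values outside the edges are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        for i in range(len(counts)):
            is_last = i == len(counts) - 1
            if edges[i] <= v < edges[i + 1] or (is_last and v == edges[-1]):
                counts[i] += 1
                break
    total = max(sum(counts), 1)
    # Floor at eps so empty bins don't produce log(0) or division by zero
    return [max(c / total, eps) for c in counts]


def population_stability_index(baseline, current, edges):
    """PSI = sum over bins of (current - baseline) * ln(current / baseline)."""
    expected = bin_proportions(baseline, edges)
    actual = bin_proportions(current, edges)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))
```

Identical distributions give a PSI of 0, and the value grows as traffic moves into bins that were rare in the baseline.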

Step 3: Automate Model Retraining

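Use SageMaker Pipelines to define your retraining workflow, and start it automatically when new data becomes available, for example with an Amazon EventBridge rule that reacts to new objects in S3 or runs on a schedule.

This helps keep your models accurate and up to date with minimal manual intervention.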
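One way to wire the trigger is an EventBridge rule that matches S3 "Object Created" events and targets the pipeline. The sketch below builds the event pattern and the target as dicts; the bucket, prefix, ARNs, and the pipeline parameter name "InputDataUrl" are hypothetical, and the bucket must have EventBridge notifications enabled for S3 to emit these events.

```python
import json


def new_training_data_pattern(bucket: str, prefix: str) -> dict:
    """EventBridge event pattern matching new objects under an S3 prefix."""
    return {
        "source": ["aws.s3"],
        "detail-type": ["Object Created"],
        "detail": {
            "bucket": {"name": [bucket]},
            "object": {"key": [{"prefix": prefix}]},
        },
    }


def retraining_pipeline_target(pipeline_arn: str, role_arn: str, input_uri: str) -> dict:
    """EventBridge target that starts a SageMaker pipeline execution."""
    return {
        "Id": "retraining-pipeline",
        "Arn": pipeline_arn,
        "RoleArn": role_arn,  # role EventBridge assumes to start the pipeline
        "SageMakerPipelineParameters": {
            # "InputDataUrl" is a hypothetical parameter your pipeline would define
            "PipelineParameterList": [{"Name": "InputDataUrl", "Value": input_uri}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(new_training_data_pattern("my-mlops-bucket", "churn/train/"), indent=2))
```

With boto3, the pattern goes to events.put_rule(Name=..., EventPattern=json.dumps(pattern)) and the target to events.put_targets(Rule=..., Targets=[target]). Note that this starts one execution per uploaded object; if data arrives as many small files, a scheduled rule is often the simpler choice.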

Day 26-30: Continuous Integration and Continuous Deployment (CI/CD) for MLOps

In the final stretch, you'll focus on integrating MLOps into your CI/CD pipelines for seamless model updates.

Step 1: Set Up a CI/CD Pipeline with AWS CodePipeline

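AWS CodePipeline is a fully managed continuous delivery service that orchestrates source, build, test, and deploy stages; it is commonly paired with AWS CodeBuild for the build and test steps. Create a pipeline that automates the entire MLOps lifecycle, from data ingestion to model deployment.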

Step 2: Integrate SageMaker with CodePipeline

Integrate your SageMaker models into the CI/CD pipeline. For example, a new model training job can be triggered whenever new data is ingested into S3, and the updated model can be automatically deployed to production once it passes validation.
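"Once it passes validation" needs a concrete gate. A minimal sketch, assuming an evaluation step writes candidate and production metrics to JSON files (the file layout and the lower-is-better validation:rmse metric are hypothetical): a CodeBuild action could run this script, and because a non-zero exit fails the build, the pipeline stops before the deploy stage.

```python
import json
import sys


def passes_gate(candidate: dict, baseline: dict, metric: str = "validation:rmse",
                max_regression: float = 0.0) -> bool:
    """For a lower-is-better metric, allow at most `max_regression` worsening."""
    return candidate[metric] <= baseline[metric] + max_regression


def main(candidate_path: str, baseline_path: str) -> int:
    with open(candidate_path) as f:
        candidate = json.load(f)
    with open(baseline_path) as f:
        baseline = json.load(f)
    ok = passes_gate(candidate, baseline)
    print("PASS" if ok else "FAIL")
    return 0 if ok else 1  # non-zero exit fails the CodeBuild action


if __name__ == "__main__" and len(sys.argv) == 3:
    sys.exit(main(sys.argv[1], sys.argv[2]))
```

Comparing against the model currently in production, rather than a fixed threshold, keeps the gate meaningful as your models improve.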

Step 3: Test and Validate the CI/CD Pipeline

Test your CI/CD pipeline to ensure everything works as expected.

Validate each stage, from data processing to model deployment, and make adjustments to optimize performance and reliability.

Final Thoughts

Mastering MLOps on AWS in 30 days is achievable with a structured plan.

Focusing on the fundamentals can help you build a strong foundation in MLOps.

Start implementing these steps today, and you'll be well on your way to becoming proficient in managing machine learning operations at scale.

Don't forget to follow me on X/Twitter and LinkedIn for daily insights.

That’s it for today!

Did you enjoy this newsletter issue?

Share with your friends, colleagues, and your favorite social media platform.

Until next week — Amrut

Posts that caught my eye this week

Is Midjourney Panicking? Free Web Version Launched in Response to Ideogram 2.0! by

Maximise Your Productivity: Harness Hot Reloading in Kubernetes by

Whenever you’re ready, there are 2 ways I can help you:

Are you thinking about getting certified as a Google Cloud Digital Leader?

Here’s a link to my Udemy course, which has helped 617+ students prepare for and pass the exam. It’s currently rated 4.24/5. (link)

Course Recommendation: AWS Courses by Adrian Cantrill (Certified + Job Ready):

ALL THE THINGS Bundle (I got this and highly recommend it!)

Get in touch

You can find me on LinkedIn or X.

If you wish to request a topic you would like to read, you can contact me directly via LinkedIn or X.
