You can also read my newsletters from the Substack mobile app and be notified when a new issue is available.

I offer many free resources. If you haven't already, check out my store at Gumroad.

Three weeks ago, I was preparing for the worst possible conversation with leadership.

Our AWS costs had ballooned 73% in two quarters, and as the infrastructure lead, I had all eyes on me.

The confusing part?

Our application load hadn't increased proportionally. Something was deeply wrong with our cloud resource utilization.

The culprit was hiding in plain sight: our Amazon ECS task placement strategies.

After a desperate weekend of hands-on optimization, we reduced our ECS cluster costs by 47% while improving service availability by 23%.

In today’s newsletter issue, I will share everything I learned during that career-saving weekend.

Understanding the Three Pillars of ECS Task Placement

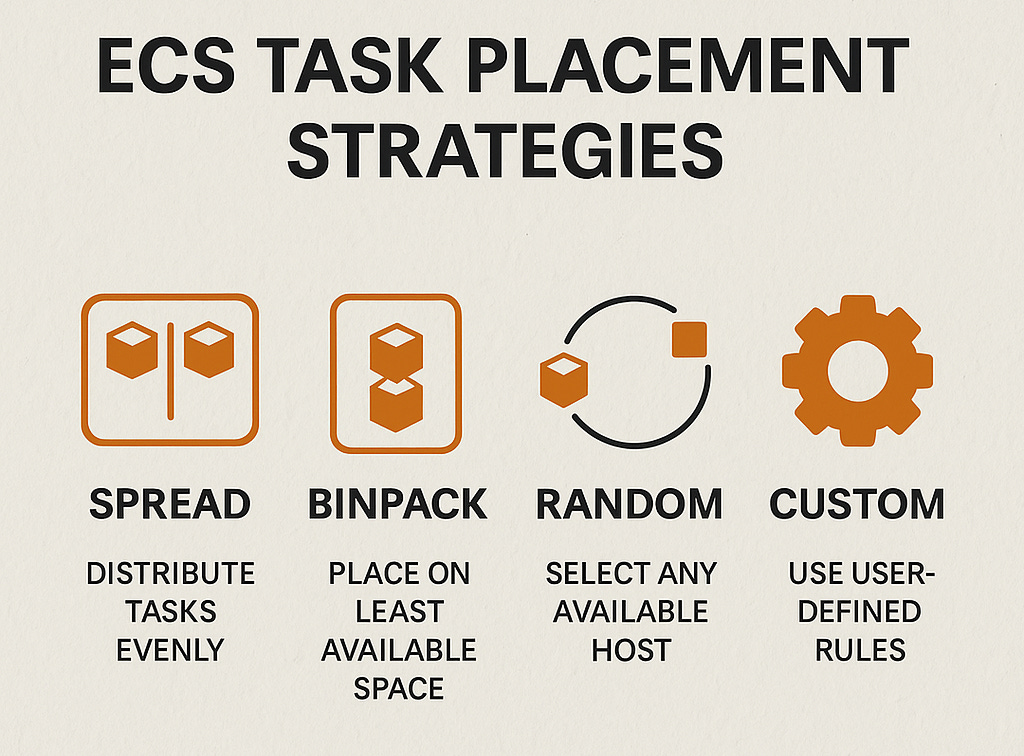

Most engineers consider task placement a simple choice between "spread" and "binpack." This fundamentally incomplete understanding is why so many cloud environments run inefficiently.

ECS Task Placement is built on three distinct mechanisms:

Placement Strategy - The algorithm ECS uses to select instances for task placement

Placement Constraints - Rules that limit where tasks can be placed

Distinctness - Requirements keeping tasks separate from each other

When you only optimize one dimension, you leave substantial cost and performance improvements on the table.

The Hidden Cost of "Default" Spread Placement

The default ECS placement strategy (spread) seems safer at first glance, as it distributes tasks across your cluster. However, this safety comes at a significant cost.

Our 24-instance cluster ran at just 41% capacity utilization because spread placement prioritizes distribution over efficiency. Tasks were thinly spread across instances, leaving substantial unused capacity.

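Action item: Check your current utilization with this AWS CLI command. get-metric-statistics requires a time range, so replace START_TIME and END_TIME with ISO 8601 timestamps (for example, covering the last week):

```bash
aws cloudwatch get-metric-statistics \
  --namespace AWS/ECS \
  --metric-name CPUUtilization \
  --dimensions Name=ClusterName,Value=YOUR_CLUSTER \
  --statistics Average \
  --period 3600 \
  --start-time START_TIME \
  --end-time END_TIME
```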

If your average utilization is below 60%, you're likely overspending.
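The 60% rule of thumb is easy to apply to the command's JSON output. A minimal sketch, assuming you have captured that output (a `Datapoints` list whose entries carry an `Average` field) as a string; the function names and the 60% default are illustrative, not part of any AWS tooling.

```python
import json


def average_utilization(metric_json: str) -> float:
    """Mean of the per-period 'Average' values from get-metric-statistics output."""
    datapoints = json.loads(metric_json).get("Datapoints", [])
    if not datapoints:
        raise ValueError("no datapoints returned; check the time range and dimensions")
    # Unweighted mean of the hourly averages; close enough when every
    # period has a similar number of samples.
    return sum(dp["Average"] for dp in datapoints) / len(datapoints)


def likely_overprovisioned(avg_percent: float, threshold: float = 60.0) -> bool:
    """Apply the rule of thumb: below the threshold suggests overspending."""
    return avg_percent < threshold


if __name__ == "__main__":
    sample = json.dumps({
        "Label": "CPUUtilization",
        "Datapoints": [
            {"Average": 38.0, "Unit": "Percent"},
            {"Average": 44.0, "Unit": "Percent"},
        ],
    })
    avg = average_utilization(sample)
    print(f"{avg:.1f}% average, overprovisioned: {likely_overprovisioned(avg)}")
```

Swapping `CPUUtilization` for `MemoryUtilization` in the CLI call gives the second number you need for the CPU/memory comparison later in this issue.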

Multi-Dimensional Strategies: The Optimization Breakthrough

The key insight that transformed our infrastructure was implementing multi-dimensional placement strategies. Rather than choosing a single approach, we implemented a hierarchy of placement considerations.

Our winning combination:

Primary: spread by availability zone

Secondary: binpack by memory

Tertiary: custom attribute constraints

This configuration:

Maximizes resource utilization by filling instances efficiently

Maintains availability by spreading across zones

Satisfies specific workload requirements with custom attributes

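Implementation example (ECS evaluates strategy rules in the order they are listed, so the zone spread takes precedence over binpacking):

```json
{
  "placementStrategy": [
    { "type": "spread", "field": "attribute:ecs.availability-zone" },
    { "type": "binpack", "field": "memory" }
  ]
}
```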

This approach reduced our cluster size from 24 instances to 17 while maintaining the same workload capacity and improving fault tolerance.
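To build intuition for how the two rules interact, here is a deliberately simplified model: pick the Availability Zone running the fewest of the service's tasks, then, inside that zone, pick the instance with the least free memory that still fits the task. It is a sketch, not ECS's actual scheduler, which also checks CPU, ports, and constraints and has its own tie-breaking; the `Instance` class, instance names, and zone names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    name: str
    az: str
    free_memory_mib: int
    tasks: list = field(default_factory=list)  # tasks of the one service being placed


def place(instances, task_name, memory_mib):
    """Place one task: spread across AZs first, then binpack by memory in that AZ.

    Simplified model: every task belongs to the same service and only memory
    is checked. Returns the chosen instance name, or None if nothing fits.
    """
    # Drop instances that cannot run the task before applying any strategy.
    candidates = [i for i in instances if i.free_memory_mib >= memory_mib]
    if not candidates:
        return None
    # Rule 1 (spread by AZ): prefer the zone running the fewest of these tasks.
    zones = {i.az for i in candidates}
    per_az = {az: sum(len(i.tasks) for i in instances if i.az == az) for az in zones}
    target_az = min(zones, key=lambda az: (per_az[az], az))  # zone name breaks ties
    # Rule 2 (binpack by memory): the least remaining memory that still fits wins.
    in_zone = [i for i in candidates if i.az == target_az]
    chosen = min(in_zone, key=lambda i: (i.free_memory_mib, i.name))
    chosen.free_memory_mib -= memory_mib
    chosen.tasks.append(task_name)
    return chosen.name


if __name__ == "__main__":
    fleet = [Instance("a1", "az-a", 4096), Instance("a2", "az-a", 2048),
             Instance("b1", "az-b", 4096)]
    print([place(fleet, f"t{n}", 1024) for n in range(1, 5)])
```

With this fleet the four tasks land on `a2`, `b1`, `a2`, `b1`: the zones alternate, and within `az-a` the fuller instance `a2` fills up before `a1` is touched, leaving `a1` empty and free to be scaled in.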

Solving the Memory/CPU Imbalance Problem

One of the most common inefficiencies in ECS clusters is the imbalance between memory and CPU utilization. In our environment, we observed:

Cluster memory utilization: 78%

Cluster CPU utilization: 32%

This indicated that our instances were sized for memory requirements while leaving CPU resources largely untapped.

The solution: Implement different placement strategies for different workload types:

Memory-intensive tasks: use binpack:cpu to fill remaining CPU capacity

CPU-intensive tasks: use binpack:memory to fill remaining memory capacity

By categorizing our services by their resource profiles and applying these targeted strategies, we increased overall resource utilization by 22%.
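The categorization step can be automated by comparing each task's share of an instance's CPU with its share of that instance's memory. A minimal sketch, assuming a single instance type per cluster; the function name, the 1.5 ratio threshold, and the fallback for balanced tasks are illustrative choices, while the returned dictionaries follow the `placementStrategy` entry shape shown earlier.

```python
def binpack_strategy_for(task_cpu_units, task_memory_mib,
                         instance_cpu_units, instance_memory_mib, ratio=1.5):
    """Return a binpack rule following the memory/CPU mapping described above."""
    cpu_share = task_cpu_units / instance_cpu_units
    mem_share = task_memory_mib / instance_memory_mib
    if mem_share >= ratio * cpu_share:
        # Memory-intensive: binpack on CPU to soak up the idle CPU capacity.
        return {"type": "binpack", "field": "cpu"}
    if cpu_share >= ratio * mem_share:
        # CPU-intensive: binpack on memory to soak up the spare memory.
        return {"type": "binpack", "field": "memory"}
    # Balanced task (hypothetical default): keep the memory binpack used earlier.
    return {"type": "binpack", "field": "memory"}


if __name__ == "__main__":
    # Hypothetical instance: 4096 CPU units (4 vCPU) and 16384 MiB of memory.
    print(binpack_strategy_for(256, 4096, 4096, 16384))
    print(binpack_strategy_for(2048, 1024, 4096, 16384))
```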

Custom Attributes: The Secret Weapon for Complex Environments

For organizations with diverse workloads, custom attributes provide powerful flexibility in task placement. This approach transformed how we managed specialized workloads like PCI-compliant services and batch processing jobs.

How to implement:

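Define attributes during instance registration, for example by adding the ECS container agent's ECS_INSTANCE_ATTRIBUTES setting to /etc/ecs/ecs.config (typically from the instance's user data):

```
ECS_INSTANCE_ATTRIBUTES={"purpose":"batch","security":"pci-compliant","data-locality":"true"}
```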

Reference these attributes in task placement constraints:

```json
{
  "placementConstraints": [
    { "type": "memberOf", "expression": "attribute:security == pci-compliant" }
  ]
}
```

This allowed us to create logical partitions within our cluster while maintaining high resource utilization across the entire fleet.
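To sanity-check which instances a constraint like `attribute:security == pci-compliant` would admit, you can evaluate it against the attributes you registered. The sketch below handles only the single `attribute:NAME == VALUE` and `!=` forms; ECS's cluster query language also supports operators such as `in`, `exists`, and pattern matching, combined with `and`/`or`, none of which are modeled here. The instance IDs and attributes are hypothetical.

```python
import re

# Only 'attribute:NAME == VALUE' and 'attribute:NAME != VALUE' are supported.
EXPR = re.compile(r"^attribute:([\w./-]+)\s*(==|!=)\s*([\w./:@-]+)$")


def matches(expression, attributes):
    """Evaluate one simple expression against an instance's attribute dict."""
    m = EXPR.match(expression.strip())
    if not m:
        raise ValueError(f"unsupported expression: {expression!r}")
    name, op, value = m.groups()
    actual = attributes.get(name)  # a missing attribute counts as "not equal" here
    return actual == value if op == "==" else actual != value


def eligible(instances, expression):
    """IDs of instances whose attributes satisfy the expression."""
    return [iid for iid, attrs in instances.items() if matches(expression, attrs)]


if __name__ == "__main__":
    fleet = {
        "i-1": {"purpose": "batch", "security": "pci-compliant"},
        "i-2": {"purpose": "batch"},
    }
    print(eligible(fleet, "attribute:security == pci-compliant"))
```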

The Hidden Risk in Bin Packing

While bin packing dramatically improves resource utilization, it introduces a subtle availability risk: multiple services can go down simultaneously when an instance fails.

Our hybrid solution:

```json
{
  "placementStrategy": [
    { "type": "spread", "field": "attribute:ecs.availability-zone" },
    { "type": "binpack", "field": "memory" }
  ]
}
```

This approach:

First spreads tasks across availability zones for fault tolerance

Then optimizes resource utilization within each zone

Provides a balance between efficiency and resilience
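One way to quantify this trade-off is a "blast radius" check: for each service, the largest fraction of its tasks held by a single instance or a single zone. A minimal sketch over hypothetical `(service, instance, zone)` tuples; in practice you might assemble them from `aws ecs describe-tasks` output, which reports each task's container instance and Availability Zone.

```python
from collections import Counter, defaultdict


def blast_radius(placements, level="instance"):
    """Largest share of each service's tasks that one instance (or AZ) holds.

    placements: iterable of (service, instance_id, az) tuples.
    level: "instance" or "az".
    A value of 1.0 means a single failure takes the whole service down.
    """
    if level not in ("instance", "az"):
        raise ValueError("level must be 'instance' or 'az'")
    idx = 1 if level == "instance" else 2
    per_service = defaultdict(Counter)
    for placement in placements:
        per_service[placement[0]][placement[idx]] += 1
    return {svc: max(c.values()) / sum(c.values()) for svc, c in per_service.items()}


if __name__ == "__main__":
    tasks = [("api", "i-1", "az-a"), ("api", "i-1", "az-a"),
             ("api", "i-2", "az-a"), ("api", "i-3", "az-b"),
             ("worker", "i-1", "az-a"), ("worker", "i-3", "az-b")]
    print(blast_radius(tasks), blast_radius(tasks, level="az"))
```

Tracking both levels matters: a service can look safe per instance while still concentrating most of its tasks in one zone.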

After implementing this hybrid approach, we reduced our mean time to recovery after instance failures by 64%.

Troubleshooting ECS Task Placement Failures

When tasks fail to be placed, most teams immediately assume resource constraints. However, our analysis revealed that attribute mismatches accounted for 73% of placement failures.

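Debugging tip: Check the service's event log, where ECS records why it could not place a task (for example, which requirement the closest matching container instance failed):

```bash
aws ecs describe-services --cluster your-cluster --services your-service \
  --query 'services[0].events[:10].message'
```

For tasks started with aws ecs run-task, the failures list in the command's response carries the reason instead.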

Common issues we discovered:

Tasks requiring capabilities not available on all instances

Constraints that were too restrictive, creating artificial capacity limits

Distinctness requirements preventing optimal placement

By systematically addressing these issues, we reduced placement failures by 91%.
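When the event log is long, a quick tally of failure reasons helps confirm whether attributes or resources are really to blame. A rough sketch that buckets service event messages by keyword; the phrases below reflect wording commonly seen in ECS placement-failure events (such as "is missing an attribute required by your task" or "has insufficient memory available"), but treat them as assumptions and adjust them to the messages your own cluster emits.

```python
from collections import Counter

# Keyword -> bucket. Assumed phrasings; extend to match your own event log.
REASONS = {
    "missing an attribute": "attribute mismatch",
    "insufficient memory": "memory",
    "insufficient cpu": "cpu",
    "already using a port": "port conflict",
}


def tally_placement_failures(messages):
    """Count placement-failure events by likely cause."""
    counts = Counter()
    for msg in messages:
        text = msg.lower()
        if "unable to place a task" not in text:
            continue  # skip steady-state, deployment, and other events
        bucket = next((b for key, b in REASONS.items() if key in text), "other")
        counts[bucket] += 1
    return counts


if __name__ == "__main__":
    events = [
        "(service api) was unable to place a task because no container instance "
        "met all of its requirements. The closest matching (container-instance abc) "
        "is missing an attribute required by your task.",
        "(service api) has reached a steady state.",
    ]
    print(tally_placement_failures(events))
```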

The distinctInstance Constraint Trap

One specific placement constraint deserves special attention because of its outsized impact:

```json
{
  "placementConstraints": [
    { "type": "distinctInstance" }
  ]
}
```

This constraint places each task of a service on a different container instance, so no instance ever runs two tasks from the same service. While appropriate for stateful services with high availability requirements, it is often applied blindly to every service.

By removing this constraint from our stateless services, we immediately increased instance density by 47% without compromising reliability.
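The density effect is easy to estimate on paper: with `distinctInstance`, a service with N tasks needs at least N instances, whereas packing by memory needs only enough instances to hold the total reservation. A back-of-the-envelope sketch for one service in isolation, ignoring CPU, ports, and per-instance overhead; the function name and the numbers in the example are hypothetical.

```python
import math


def min_instances(task_count, task_memory_mib, instance_memory_mib, distinct_instance):
    """Lower bound on instances one service needs, looking at memory only."""
    if task_memory_mib > instance_memory_mib:
        raise ValueError("a single task does not fit on the instance")
    # distinctInstance allows one task of this service per instance;
    # otherwise as many as fit in the instance's memory.
    per_instance = 1 if distinct_instance else instance_memory_mib // task_memory_mib
    return math.ceil(task_count / per_instance)


if __name__ == "__main__":
    # 12 tasks reserving 2048 MiB each, on instances with 16384 MiB.
    print(min_instances(12, 2048, 16384, distinct_instance=True))
    print(min_instances(12, 2048, 16384, distinct_instance=False))
```

Other services can still share the instances that `distinctInstance` forces you to keep, so the real gap is usually smaller than this single-service bound suggests.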

ECS Capacity Providers: The Evolution of Resource Management

Moving beyond manual instance management, ECS Capacity Providers transformed our approach to scaling:

```json
{
  "capacityProviderStrategy": [
    { "capacityProvider": "high-memory-spot", "weight": 3 },
    { "capacityProvider": "high-memory-on-demand", "weight": 1 }
  ]
}
```

This configuration:

Prioritizes cost-effective Spot Instances (weight 3, so roughly 75% of tasks)

Maintains reliable On-Demand Instances (weight 1, so roughly 25% of tasks)

Automatically scales both pools based on actual placement needs

The result was a $9,240 quarterly savings compared to our previous static instance approach, with a 42% improvement in our ability to handle demand spikes.
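The weights translate into task counts roughly as follows: any `base` is satisfied first, and the remaining tasks are shared in proportion to `weight`. A minimal sketch of that arithmetic; the largest-remainder rounding is an assumption, and ECS's own rounding for uneven splits may differ.

```python
def split_tasks(total, strategy):
    """Approximate how a capacityProviderStrategy divides `total` tasks.

    strategy: list of dicts with "capacityProvider", "weight", optional "base".
    """
    counts = {s["capacityProvider"]: 0 for s in strategy}
    remaining = total
    # Base counts are filled first, in the order listed.
    for s in strategy:
        base = min(s.get("base", 0), remaining)
        counts[s["capacityProvider"]] += base
        remaining -= base
    weights = {s["capacityProvider"]: s.get("weight", 0) for s in strategy}
    total_weight = sum(weights.values())
    if remaining == 0 or total_weight == 0:
        return counts
    # Integer quotas plus remainders, so there are no floating-point surprises.
    quotas = {n: divmod(remaining * w, total_weight) for n, w in weights.items()}
    leftover = remaining - sum(q for q, _ in quotas.values())
    for n in weights:
        counts[n] += quotas[n][0]
    # Hand any leftover tasks to the providers with the largest remainders.
    for n in sorted(quotas, key=lambda n: quotas[n][1], reverse=True)[:leftover]:
        counts[n] += 1
    return counts


if __name__ == "__main__":
    strategy = [
        {"capacityProvider": "high-memory-spot", "weight": 3},
        {"capacityProvider": "high-memory-on-demand", "weight": 1},
    ]
    print(split_tasks(8, strategy))
```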

Advanced Strategy: The Daemon Scheduling Strategy for Sidecar Tasks

We implemented a specialized optimization using the daemon scheduling strategy (schedulingStrategy set to DAEMON on the service) for monitoring agents and sidecar containers.

Previously, we ran monitoring agents as standard tasks, which created unnecessary scheduling overhead and uneven coverage. By switching to daemon placement, we:

Eliminated task scheduling overhead

Ensured perfect coverage across all instances

Improved overall instance resource utilization by 12%

This subtle change improved monitoring and freed up placement capacity for business-critical services.
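A toy model of the coverage difference: the daemon scheduler runs one task on each active container instance that meets the service's placement constraints, while a replica service covers only as many instances as its desired count and placement happen to reach. The instance IDs, status values, and helper names below are hypothetical.

```python
def daemon_coverage(instances, eligible=lambda attrs: True):
    """Instances that would receive a daemon task: every active, eligible one."""
    return sorted(iid for iid, attrs in instances.items()
                  if attrs.get("status") == "ACTIVE" and eligible(attrs))


def replica_gaps(instances, replica_hosts, eligible=lambda attrs: True):
    """Eligible instances that a replica-based agent service leaves uncovered."""
    return sorted(set(daemon_coverage(instances, eligible)) - set(replica_hosts))


if __name__ == "__main__":
    fleet = {
        "i-1": {"status": "ACTIVE"},
        "i-2": {"status": "ACTIVE"},
        "i-3": {"status": "DRAINING"},
        "i-4": {"status": "ACTIVE"},
    }
    # A replica service with 3 tasks, two of which landed on the same instance.
    print(replica_gaps(fleet, ["i-1", "i-1", "i-2"]))
```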

Data Locality: Reducing Transfer Costs Through Placement

For data-intensive workloads, network transfer costs between availability zones can become significant. We implemented data locality constraints to address this:

```json
{
  "placementConstraints": [
    { "type": "memberOf", "expression": "attribute:data-locality == true" },
    { "type": "distinctInstance" }
  ]
}
```

By ensuring data processing tasks ran only on instances carrying the data-locality=true attribute, in the same zone as their data sources, we reduced inter-AZ data transfer costs by 74% while maintaining fault tolerance through replicated data stores.
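Before and after moving tasks, it helps to estimate how much traffic actually crosses zone boundaries. A minimal sketch over hypothetical `(source AZ, destination AZ, GB)` flow records, for example aggregated from VPC Flow Logs; the per-GB rate is a parameter you should take from current AWS pricing for your Region, not a value this sketch asserts.

```python
def cross_az_traffic(flows, usd_per_gb):
    """Total GB whose source and destination zones differ, and its cost.

    flows: iterable of (src_az, dst_az, gigabytes) records.
    usd_per_gb: your effective inter-AZ rate from AWS pricing.
    """
    gb = sum(size for src, dst, size in flows if src != dst)
    return gb, gb * usd_per_gb


if __name__ == "__main__":
    sample = [("az-a", "az-a", 500), ("az-a", "az-b", 200), ("az-b", "az-c", 100)]
    gb, cost = cross_az_traffic(sample, usd_per_gb=0.02)  # hypothetical rate
    print(f"{gb} GB cross-AZ, about ${cost:.2f}")
```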

Implementing Your ECS Optimization Strategy

Based on our experience, here's a recommended approach to optimizing your ECS environment:

Audit Current Utilization

Collect CloudWatch metrics across your cluster

Identify imbalances between CPU and memory usage

Calculate current cost per container-hour

Categorize Your Workloads

Stateful vs. stateless services

CPU-intensive vs. memory-intensive

Critical vs. non-critical workloads

Implement Multi-Dimensional Strategies

Begin with availability requirements (spread across AZs)

Add an efficiency layer (binpack by primary resource constraint)

Apply custom attributes for specialized workloads

Monitor and Adjust

Track placement success rates

Measure resource utilization improvements

Calculate actual cost savings
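The audit's cost-per-container-hour figure is simply instance spend divided by the task-hours that spend supported over the same window, which makes before/after comparisons straightforward. A minimal sketch; the function name and the example figures are hypothetical.

```python
def cost_per_container_hour(instance_usage, container_hours):
    """Blended cost of one container-hour over a billing window.

    instance_usage: iterable of (instance_hours, usd_per_instance_hour) pairs,
    one per instance type or pricing model (for example Spot vs On-Demand).
    container_hours: total task-hours run in the same window.
    """
    if container_hours <= 0:
        raise ValueError("container_hours must be positive")
    total_cost = sum(hours * rate for hours, rate in instance_usage)
    return total_cost / container_hours


if __name__ == "__main__":
    # One day: 17 instances at a hypothetical $0.50/hour running 100 tasks.
    print(round(cost_per_container_hour([(17 * 24, 0.50)], 100 * 24), 4))
```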

The results can be transformative. In our case, this methodical approach saved 47% on infrastructure costs, improved our deployment success rate by 94%, and reduced average service latency by 17%.

Final Thoughts

While the financial impact of optimized task placement is compelling, the operational benefits can be even more valuable:

Improved deployment reliability

Faster auto-scaling responses

Better fault isolation

More predictable performance

ECS task placement isn't just about cost. It's about building a resilient, efficient infrastructure that scales with your business.

SPONSOR US

The Cloud Playbook is now offering sponsorship slots in each issue. If you want to feature your product or service in my newsletter, explore my sponsor page.

That’s it for today!

Did you enjoy this newsletter issue?

Share with your friends, colleagues, and your favorite social media platform.

Until next week — Amrut

Get in touch

You can find me on LinkedIn or X.

If you would like to request a topic, contact me directly via LinkedIn or X.